CRITICAL CYBERSECURITY ALERT

Date: November 2025 • Threat: CVE-2024-27564 (Server-Side Request Forgery)

Why it matters: Threat actors are abusing ChatGPT’s pictureproxy component to force internal HTTP requests, harvest data, and target US government organizations.

Threat researchers warn that CVE-2024-27564—a server-side request forgery (SSRF) flaw in OpenAI’s ChatGPT infrastructure—is being weaponized at scale. Veriti telemetry logged 10,479 attack attempts in a single week, underscoring that even “medium” severity weaknesses can deliver high-impact breaches when automation, AI, and misconfigured defenses collide.

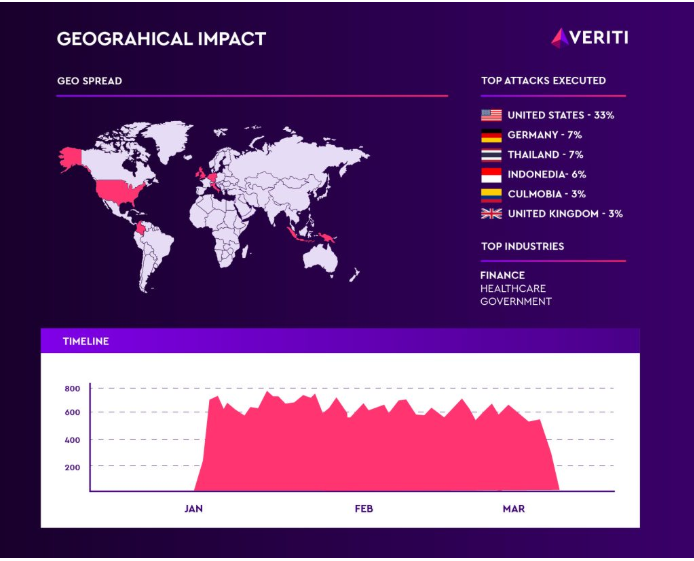

The coordinated campaigns heavily target the United States (33% of hits), followed by Germany and Thailand (7% each), with additional probes observed in Indonesia, Colombia, and the United Kingdom. Financial institutions and AI-driven service providers sit squarely in the crosshairs as adversaries co-opt ChatGPT to send malicious requests against protected APIs and internal services.

KEY TAKEAWAYS AT A GLANCE

Exploitation Metrics

- 10,479 attack attempts from a single malicious IP observed within one week.

- 33% of attacks target US organizations; Germany and Thailand each absorb 7%.

- Financial institutions named as primary victims due to heavy API usage.

- 35% of organizations remain exposed because of IPS, WAF, or firewall misconfigurations.

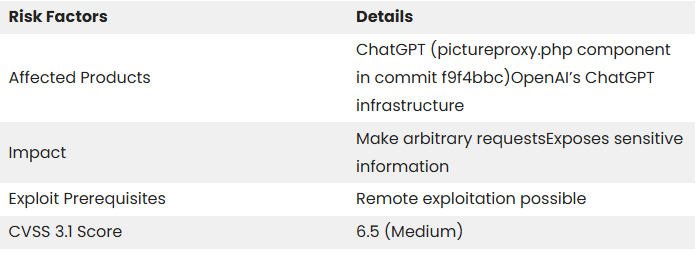

Vulnerability Snapshot (CVE-2024-27564)

HOW CVE-2024-27564 ENABLES SSRF

ChatGPT’s pictureproxy.php handler fetches remote images on behalf of users. By injecting crafted URLs into the url parameter, adversaries can redirect the service to internal endpoints, bypass perimeter controls, and siphon confidential responses. This mirrors the risks highlighted in our analysis of AI-powered cyber attacks already affecting enterprise SOC teams.

Because the requests originate from trusted OpenAI infrastructure, downstream systems may treat the traffic as legitimate, magnifying the blast radius. The vulnerability is especially dangerous for institutions that expose administrative consoles or metadata services to internal-only networks without strict input validation.
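To make the input-validation point concrete, here is a minimal Python sketch of the kind of server-side check a URL-fetching proxy such as pictureproxy.php could run before following a user-supplied url parameter. It is not OpenAI's or the project's actual patch, and the function names (is_safe_image_url, resolve_host, is_public_address) are hypothetical. The idea is to resolve the hostname and refuse the request unless every resolved address is globally routable, which rules out loopback, private ranges, and link-local metadata endpoints such as 169.254.169.254.

```python
import ipaddress
import socket
from urllib.parse import urlsplit

# Schemes an image fetcher plausibly needs; file:, gopher:, ftp:, etc. are refused.
ALLOWED_SCHEMES = {"http", "https"}


def resolve_host(host):
    """Return every address the hostname resolves to (IPv4 and IPv6)."""
    return [info[4][0] for info in socket.getaddrinfo(host, None)]


def is_public_address(addr):
    """True only for globally routable, non-multicast addresses."""
    try:
        ip = ipaddress.ip_address(addr.split("%")[0])  # drop any IPv6 zone id
    except ValueError:
        return False
    return ip.is_global and not ip.is_multicast


def is_safe_image_url(url, resolver=resolve_host):
    """Hypothetical pre-fetch check: reject URLs that would make the proxy
    reach loopback, private, link-local, or otherwise non-public hosts."""
    try:
        parts = urlsplit(url)
        host = parts.hostname
    except ValueError:  # e.g. malformed IPv6 brackets
        return False
    if parts.scheme not in ALLOWED_SCHEMES or not host:
        return False
    if parts.username or parts.password:  # refuse "user@host" style URLs outright
        return False
    try:
        addresses = resolver(host)
    except (OSError, UnicodeError):  # unresolvable or un-encodable host: fail closed
        return False
    # Every resolved address must be public; one internal record is enough to refuse.
    return bool(addresses) and all(is_public_address(a) for a in addresses)
```

Checking resolved addresses rather than matching strings also catches names such as localhost or DNS records that point inward. A check like this is only one layer: the fetcher should connect to the address it validated (to resist DNS rebinding), refuse or re-validate redirects, and still sit behind network-level egress rules.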

GLOBAL CAMPAIGN INTELLIGENCE

Veriti’s investigation links the surge to coordinated, automated attack campaigns. Attack volumes spiked sharply in January 2025 before tapering in February and March, suggesting either defensive improvements or a pivot to stealthier techniques. This pattern mirrors the escalation seen in large-scale breach campaigns like the AT&T data breach wave, where attackers rotated infrastructure after initial detection.

Financial-sector targets, including banks, fintech startups, and payment processors, face outsized risk. Compromised ChatGPT calls can expose transaction data or API secrets, or even authorize fraudulent transfers if downstream services trust requests from OpenAI domains.

- Patch & Validate: Apply OpenAI updates, review custom integrations, and enforce strict URL validation in any workflows invoking ChatGPT web actions.

- Harden Gateways: Ensure IPS, WAF, and firewalls block SSRF signatures associated with CVE-2024-27564. Over a third of organizations remain unprotected due to misconfigurations.

- Monitor & Segment: Inspect logs for abnormal requests originating from OpenAI infrastructure. Use network segmentation so that URL-fetching services cannot freely reach metadata endpoints or back-office systems.

- Threat Hunt: Incorporate AI/LLM attack scenarios into playbooks and leverage behavioral detection, as covered in our AI phishing surge alert.
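For teams that operate a pictureproxy.php-style endpoint themselves, one concrete starting point for the monitoring and hunting steps above is to scan its access logs for url parameters that point at internal targets. The Python sketch below assumes Apache/nginx "combined"-style log lines; the regex, the SUSPICIOUS_HOSTS watch-list, and the function names are hypothetical and should be adapted to your own logging.

```python
import ipaddress
import re
from urllib.parse import parse_qs, urlsplit

# Assumes Apache/nginx "combined"-style lines; adjust the regex to your log format.
REQUEST_RE = re.compile(
    r'^(?P<client>\S+) \S+ \S+ \[[^\]]*\] "(?P<method>[A-Z]+) (?P<target>\S+) [^"]*"'
)

# Hypothetical watch-list of internal hostnames; extend with your own.
SUSPICIOUS_HOSTS = {"localhost", "metadata", "metadata.google.internal"}


def is_internal_target(fetch_url):
    """Heuristic: does a proxied URL aim at a non-public or non-HTTP target?"""
    try:
        parts = urlsplit(fetch_url)
        host = parts.hostname
    except ValueError:
        return True  # malformed URLs in a proxy parameter are worth a look
    if parts.scheme not in {"http", "https"} or not host:
        return True
    if host in SUSPICIOUS_HOSTS:
        return True
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        return False  # a DNS name; resolving it is out of scope for offline triage
    return not ip.is_global or ip.is_multicast


def flag_ssrf_attempts(lines):
    """Yield (client, fetch_url) for pictureproxy.php requests aimed at internal targets."""
    for line in lines:
        m = REQUEST_RE.match(line)
        if not m:
            continue
        try:
            target = urlsplit(m.group("target"))
        except ValueError:
            continue
        if not target.path.endswith("pictureproxy.php"):
            continue
        # parse_qs percent-decodes, so url=http%3A%2F%2F... is inspected in clear form.
        for fetch_url in parse_qs(target.query).get("url", []):
            if is_internal_target(fetch_url):
                yield m.group("client"), fetch_url
```

This is deliberately a triage heuristic: it does not resolve DNS names and will miss unusual encodings (for example, integer-form IPv4 addresses), so treat its output as leads for investigation rather than a complete detection.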

DEFENSIVE PRIORITIES

WHAT ORGANIZATIONS SHOULD DO NOW

Security teams should immediately audit ChatGPT integrations, sanitize user-controlled URL parameters, and restrict outbound access from proxy components to only vetted destinations. Financial institutions, SaaS providers, and government agencies must assume the vulnerability has been probed and validate that no malicious redirects or forged API calls slipped through existing controls.
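Restricting proxy egress to vetted destinations is stricter than blocking known-bad ranges. A minimal Python sketch of an application-level allowlist follows; VETTED_HOSTS and is_vetted_destination are hypothetical names, and the listed hosts are placeholders. It requires HTTPS on the default port and an exact hostname match, so userinfo tricks (trusted-host@attacker) and look-alike suffixes are rejected.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: replace with the image/CDN hosts your integration actually needs.
VETTED_HOSTS = {"images.example-cdn.com", "uploads.example.org"}


def is_vetted_destination(url, vetted=VETTED_HOSTS):
    """Allow only HTTPS on port 443 to an exactly matching, pre-approved host."""
    try:
        parts = urlsplit(url)
        host = parts.hostname  # lowercased, userinfo stripped
        port = parts.port      # raises ValueError for non-numeric or out-of-range ports
    except ValueError:
        return False
    if parts.scheme != "https" or host is None:
        return False
    if port not in (None, 443):
        return False
    return host in vetted  # exact match only; no suffix or substring matching
```

Exact matching is deliberate: a suffix check such as host.endswith("example-cdn.com") would also accept attacker-registered names like evil-example-cdn.com. The same allowlist should be mirrored in firewall or proxy egress rules so the application check is not the only control.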

Because the campaign continues to evolve, maintain updated threat intelligence feeds and collaborate with cloud providers to evaluate indicators tied to the attack infrastructure. Over the long term, adopt zero-trust principles around LLM-connected services to prevent cross-tenant abuse.

RELATED ARTICLES ON CYBERUPDATES365

- BREAKING: North Korean Hacker Groups Kimsuky and Lazarus Unveil Advanced Backdoor Tools

- BREAKING: AT&T Data Breach Affects 73 Million Customers – FBI Issues Alert

- AI Phishing Attacks Surge 300% in US – CISA Issues Emergency Alert


Educational use only. All statistics and campaign details verified through cited threat intelligence. Always follow responsible disclosure guidelines and coordinate with OpenAI before testing live systems.
